Down the Rabbit Hole

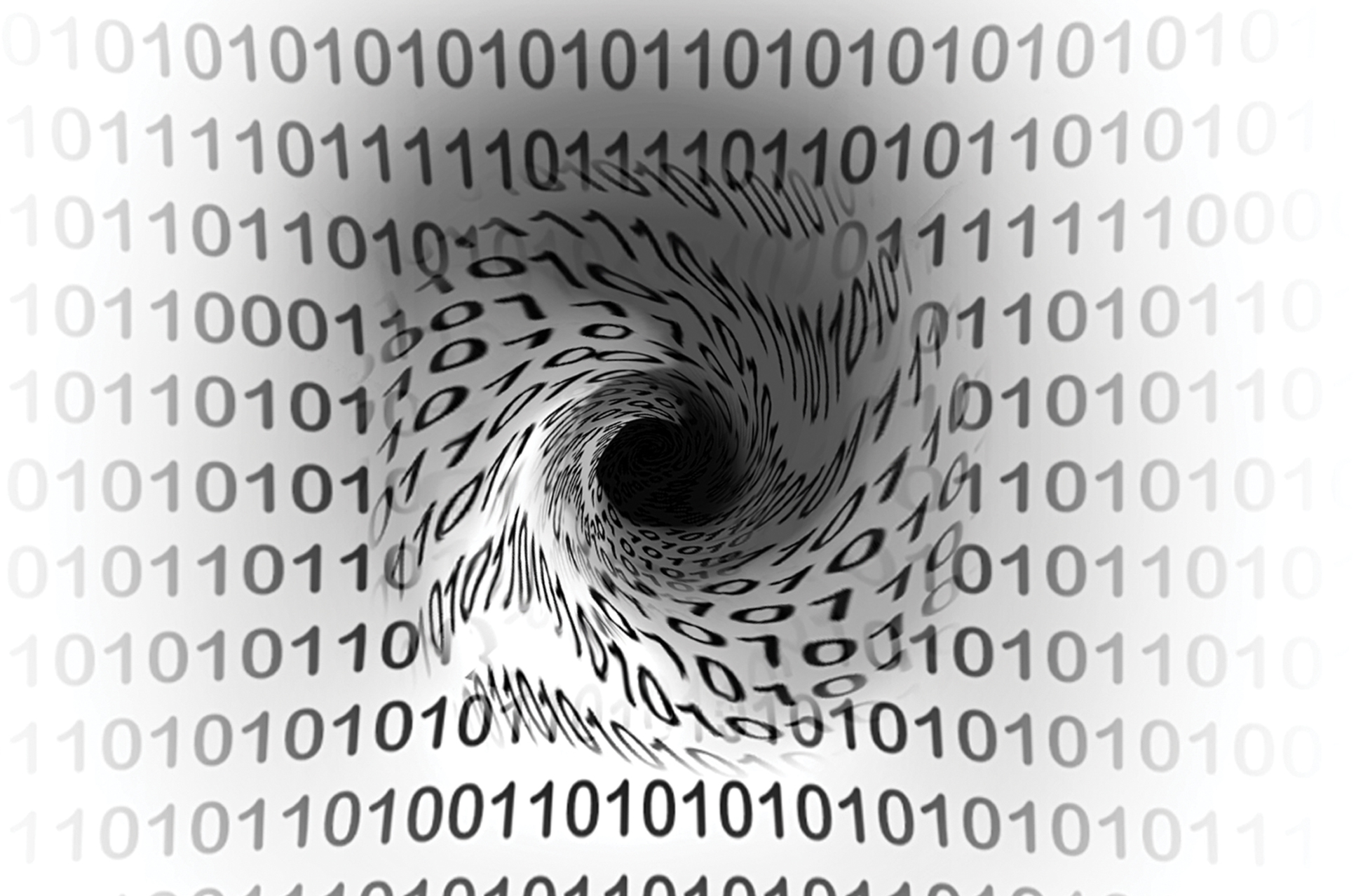

Advanced data analytics and artificial intelligence models are a game changer for the property and casualty insurance industry.

These sophisticated technologies are being used across the insurance value chain to reduce claims administration costs, to segment risks into granular classes of business, and to enhance the customer experience, but underwriters and claims adjusters, in particular, may be at a crossroads.

What if the analyses and models conflict with the knowledge and experience of seasoned underwriting and claims professionals? In other words, what if people across the enterprise question the findings of the advanced analytics and AI models and decide to overlook or override the insights presented?

Obviously, the capital invested by the industry in data science would be undermined, if not squandered, if the results were disregarded. And according to a 2019 study by Swiss Re, insurance companies globally spend about $20 billion annually on advanced analytics.

Dave North, president and CEO of third-party claims administrator Sedgwick, offers the example of a skeptical claims adjuster questioning the validity of a model producing insights on claims severity. “If the analytics doesn’t match the person’s experience handling such claims in the past,” North says, “the claims adjuster may suppress the guidance, defeating the intended purpose of the model.”

On one hand, the adjuster’s decision to dismiss the analysis may be the proper course of action—a “red flag” alerting the data science team that the model’s findings appear to be erroneous. Upon further review, the data scientists may discover the outcome has been adulterated by inaccurate data—the oft-cited “garbage in, garbage out” effect.

On the other hand, the outcome actually may be a more precise evaluation of high-severity claims than the adjuster’s experience and gut instinct. If the person overrides the findings, a claim that could have been moderated through earlier intervention will end up costing more.

The challenge is finding the right balance between the insights of machines and people—encouraging claims and underwriting professionals to trust the analytics and AI models by ensuring the raw data and the algorithms that crunch this information into insights are beyond reproach. “We’re at a tipping point,” North says, “where the data analytics are becoming more robust, yet people still have trouble trusting the findings. If you don’t trust the data, you won’t learn from it.”

How Accurate Are They?

“There is definitely an error rate, but the important question is not is the AI model 100% accurate but is it more accurate on a relative basis than the traditional manual processing of data,” says Sean Ringsted, chief digital officer at Chubb. “The answer is absolutely.”

Ringsted makes an excellent point: machines do the same thing every time, whereas people are prone to human error. But since humans provide a significant percentage of the data that AI models tally up and calculate, human errors and inherent subjectivities may find their way into the machine, slanting the analysis and undermining the impact of decisions based on the findings.

A 2019 survey of more than 1,100 C-level executives and finance professionals discovered a high rate of human errors in financial and accounting data affecting the quality of CEO decisions. “Nearly seven in 10 CEOs had made a significant business decision based on out-of-date or incorrect financial data, an alarming finding,” says Marc Huffman, president and chief operating officer at finance and accounting automation software provider BlackLine, which commissioned the independent survey.

The survey respondents attributed the high error rate to the already huge and fast-growing volume of financial data flowing into companies. Accountants manually closing the books to prepare the financial statements had to make sense of the data, and as the reporting deadline neared, their error rate increased. Companies that transitioned to automated systems were less at risk of making mistakes.

Something similar is at play in the insurance industry, as underwriters and claims specialists transition from the manual collection and assessment of data to a reliance on the data insights generated by advanced analytics.

“Whether you’re using the latest analytical tools or doing things the old-fashioned way, you still need to reconcile the data, looking back historically five or 10 years to interpret the relevance of data from yesterday’s world to today’s world,” says Ringsted. “The difference is that AI tools provide a more fine-tuned analysis at a lower error rate.”

Ellen Carney, principal analyst for insurance at Forrester Research, echoes this opinion. “Advanced analytics are a lubricant for the insurance industry, offering a way to make faster and better use of data, generating insights that enhance decisions across the value chain—assuming the data is accurate, the tools are accurate, more so than people relying on gut instinct,” she says.

The Effects of Bad Data

But automated systems are not a panacea. Dick Lavey, president of agency markets at The Hanover Insurance Group, recalls an automated risk-modeling tool lifting third-party data to populate the fields in a business owners policy for a restaurant. The model had incorrectly incorporated data that suggested the restaurant had a delivery service when, in fact, it did not, altering its risk profile. “This could mean we accept an account that perhaps we should not,” Lavey says, “or vice versa.”

In other words, if the data informing a model are inaccurate, the decisions drawn from the model’s findings could result in an insurer’s taking on risky business or avoiding what otherwise would be profitable business.

Jim Tyo shares this perspective. “Inaccurate data within the insurance industry has significant risks, much more than other types of industries,” says Tyo, the chief data officer at Nationwide. Tyo says regulatory violations, for example, could come with heavy fines, a compromised reputation, and a damaged bottom line.

Bad data also have a substantial impact on consumers purchasing insurance products, Tyo says, since the premiums are based on modeled data the insurer believes are accurate. He further notes the impact of inaccurate data on a carrier’s loss-reserving activity, which is based on the cost of risk per policyholder.

In such cases, it would be prudent for seasoned insurance professionals to question the findings as opposed to blindly following the guidance. “The tools should not preclude human judgment,” North says. Should the findings conflict with past experience, he says, “claims adjusters must have the ability to jump in.”

Ringsted says a second opinion is always a good thing. “People and machines must work hand in glove,” he says. “The analytics must complement the work of underwriters and claims specialists.”

He maintains there is “nothing inherently wrong” with knowledgeable people questioning the output of a model. “One thousand florists in Kansas may be found to share very similar property or liability risks, but there is always going to be the one florist that is an outlier,” Ringsted says. “That’s when an underwriter’s gut instincts can be invaluable. But you also don’t want them systemically overriding the findings. The goal is to combine data science and human intuition in such a way that one discipline does not overrule the other.”

Building Trust

Focusing on the accuracy of data could be a way to generate trust among the users of AI models. As Henna Karna, chief digital officer at AXA XL, puts it, “If the models aren’t trusted, people won’t use them.”

Other industry data scientists concur that data quality is the foundation for building user trust. “This industry has always been consumed with the need to ensure accurate data, the raw material of our products,” says Mano Mannoochahr, the chief data and analytics officer at Travelers.

What’s different is that data are now collected from myriad internal and third-party sources in much greater volumes and then digitized and analyzed using sophisticated analytics and AI models. “While these tools present more accurate insights, they depend upon the quality of the data input into them,” he says. “Consequently, the industry’s long-standing effort to ensure data quality cannot diminish.”

How are insurers going about these efforts? Sandeep Haridas, vice president and manager of underwriting strategy and excellence at Liberty Mutual, says the insurer addresses the integrity of model outcomes by having a team of tenured data scientists who understand the business collaborate with underwriters in building the models.

“By engaging both parties in curating the data, you build confidence in the models and trust in the findings, reducing the chance that someone will dispute an outcome and go with their gut,” Haridas says.

That approach is standard across the industry.

“Advanced data analytics doesn’t exist in a back office somewhere,” says Karna. “Models are human-driven and collaborative by nature, people in underwriting and claims working closely with data scientists to develop models reflecting what the adjuster or underwriter is going to use the tool for.”

Ringsted agrees. “You don’t build models in a vacuum,” he says. “Data sets are tediously built, first by hand and then introduced into the model, making the analytics better over time.”

Ringsted stresses that the purpose of the model needs to be clear, “which requires the collaboration of two disciplines: data scientists and experts in underwriting or claims.”

At The Hanover, underwriters or agents must verify all third-party data before they are pre-filled into a model by the insurer’s data scientists. “We won’t add derived third-party data into our models unless we’re sure of the accuracy,” Lavey says.

To do this, third-party vendors are required to provide a proof of concept verifying the accuracy of their data, which are then compared to internal account data. Lavey calls the process “a good starting point” from which analysts will scrutinize data and, conceivably, identify discrepancies. “We still control the output,” he says.

Several internal teams at Nationwide, including its Enterprise Data Office and Enterprise Analytics Office, are tasked with ensuring accurate, high-quality data. Working jointly with relevant business professionals, Tyo says, these teams undertake a thorough review of data reliability, definitions and documentation to ensure “accuracy, completeness and taxonomy.”

At Chubb, data analysts do an initial review of data, including cross-checking key fields against different sources. When reviewing a client’s revenue figures, analysts routinely cross-check the data with figures provided by Dun & Bradstreet.
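For readers who want to picture what such a cross-check might look like in practice, here is a minimal sketch in Python. It is not Chubb's method: the function name, the record fields, and the 10% tolerance are all hypothetical, chosen only to illustrate comparing a reported figure against an independent reference and flagging large gaps for an analyst.

```python
from dataclasses import dataclass


@dataclass
class RevenueCheck:
    account_id: str
    reported: float      # revenue figure supplied with the account
    reference: float     # figure from an independent source
    relative_gap: float  # |reported - reference| / reference
    needs_review: bool   # True when an analyst should look at the account


def cross_check_revenue(account_id, reported, reference, tolerance=0.10):
    """Compare a reported revenue figure with an independent reference.

    `tolerance` is a hypothetical threshold (10% by default); a real
    program would set it from its own experience with the data.
    """
    if reference <= 0:
        # No usable reference figure, so route the account to an analyst.
        return RevenueCheck(account_id, reported, reference, float("inf"), True)
    gap = abs(reported - reference) / reference
    return RevenueCheck(account_id, reported, reference, gap, gap > tolerance)


if __name__ == "__main__":
    for check in (
        cross_check_revenue("A-001", 1_050_000, 1_000_000),
        cross_check_revenue("A-002", 2_000_000, 1_000_000),
    ):
        print(check.account_id, round(check.relative_gap, 2), check.needs_review)
```

In this sketch a flagged account is simply handed to a person; the check narrows where human attention goes rather than replacing it.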

“After this initial fact check, we evaluate trends in the data,” Ringsted says. “Large companies, for instance, will have more general liability claims. Therefore, as a client’s revenue increases, we should correspondingly see an increase in claim frequency.”

Throughout the process, Chubb’s data analysts work closely with the underwriters. “We want to be sure the data conforms to their expectations,” Ringsted says. “If it doesn’t, that’s a problem.”

Assuming these various internal controls verify and validate the use of high-quality data, the possibility that underwriters and claims specialists will go with their gut should decrease. To further minimize this possibility, Travelers has introduced a traffic light system of red, yellow and green indicators specifically directing when an underwriter must accept the findings of a model and when they can override this guidance.

Mannoochahr provides the example of a property insurance policy renewal. “Typically, the underwriter might order an inspection to assess the property exposures, a process that consumes several days,” he says. “The analytics may suggest no need to order the inspection, represented by a green flag. A yellow flag gives the underwriter the opportunity to override the model and order the risk control. A red flag strongly encourages the person to do that.”
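The logic Mannoochahr describes can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Travelers' system: the risk score, the 0.4 and 0.7 thresholds, and the function names are hypothetical, and treating green and red as settled outcomes is a simplification (the article says a red flag "strongly encourages" an inspection rather than requiring one).

```python
from enum import Enum


class Flag(Enum):
    GREEN = "green"    # model suggests no inspection is needed
    YELLOW = "yellow"  # underwriter may override and order one
    RED = "red"        # underwriter is strongly encouraged to order one


def inspection_flag(risk_score, yellow_at=0.4, red_at=0.7):
    """Map a model risk score in [0, 1] to a traffic-light flag.

    The thresholds are hypothetical placeholders.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= red_at:
        return Flag.RED
    if risk_score >= yellow_at:
        return Flag.YELLOW
    return Flag.GREEN


def order_inspection(flag, underwriter_wants_inspection):
    """Decide whether an inspection is ordered.

    Simplifying assumption: green follows the model, red defaults to an
    inspection, and yellow leaves the choice to the underwriter.
    """
    if flag is Flag.GREEN:
        return False
    if flag is Flag.RED:
        return True
    return underwriter_wants_inspection


if __name__ == "__main__":
    flag = inspection_flag(0.55)
    print(flag.value, order_inspection(flag, underwriter_wants_inspection=True))
```

The point of the middle category is that the underwriter's judgment is an explicit input to the decision, not a workaround.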

By separating the guidance into categories, underwriters are provided leeway to tap into their knowledge and experience. This “right touch” strategy, as Mannoochahr calls it, is being employed in property underwriting at present and will migrate to workers compensation underwriting this year. “We’re nudging our people to trust the ‘machine’ is working,” he says, creating “harmony between automation and appropriate oversight and intervention by humans.”

A similar process for building trust is under way at Penn National. “We’re always looking for ways to eliminate the possibility that someone will question if our model outcomes are correct,” says Britta Schatz, the chief information officer.

Schatz cites a model her team developed to identify claims that offered the possibility of collecting subrogation from another insurer, minimizing the company’s overall loss exposure. Previously, the insurer left this determination up to individual claims adjusters.

“The model suggested that more than a few subrogation opportunities were being squandered,” Schatz says. “The adjusters had not identified these claims for subrogation or had inadvertently overlooked them. It was a learning experience. When apprised of the model outcomes, what might have been a trust issue disappeared.”

All the insurers are cognizant that the millions of dollars invested in advanced analytics and AI models will be wasted unless users trust the findings. To enhance human trust, and thus buy-in, the insurers have elevated the need for their employees to understand and value the crucial importance of accurate data collection, processing, dissemination and analysis. In this regard, Travelers has created an illustrated graphic titled “Data Knowledge Map,” depicting how the company selects risks, issues a policy, pays claims and serves people. “All of this is predicated on data and analytics,” Mannoochahr says. The insurer is rolling out the map across the enterprise, he says, “so that everyone appreciates their crucial role in capturing the right data correctly. Our profits depend upon it.”